Adversaries Are Now Cloning High-End AI Reasoning

In mid-February 2026, Google Threat Intelligence Group confirmed a dangerous shift: attackers aren’t just using AI tools; they are stealing the underlying logic to build their own unconstrained weapons.

For the last year, the security community has asked, “When will adversaries build their own frontier models?” The answer, according to a new report from Google Threat Intelligence Group (GTIG), is that they don’t have to.

Instead, they are stealing them.

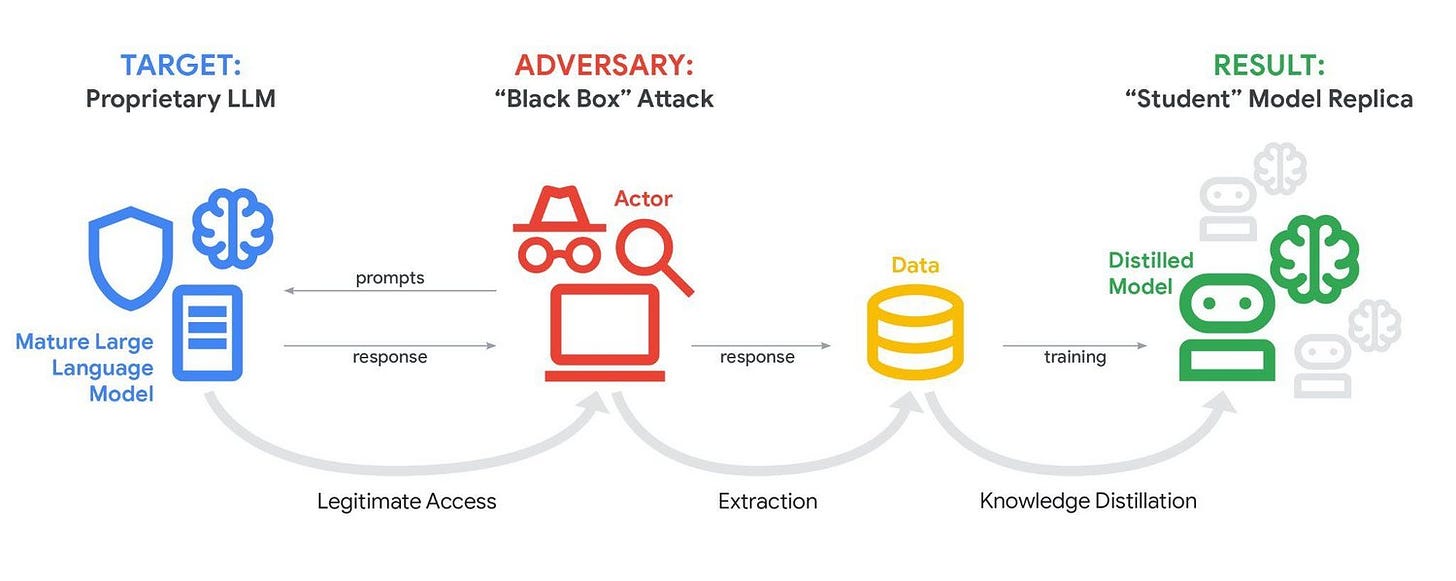

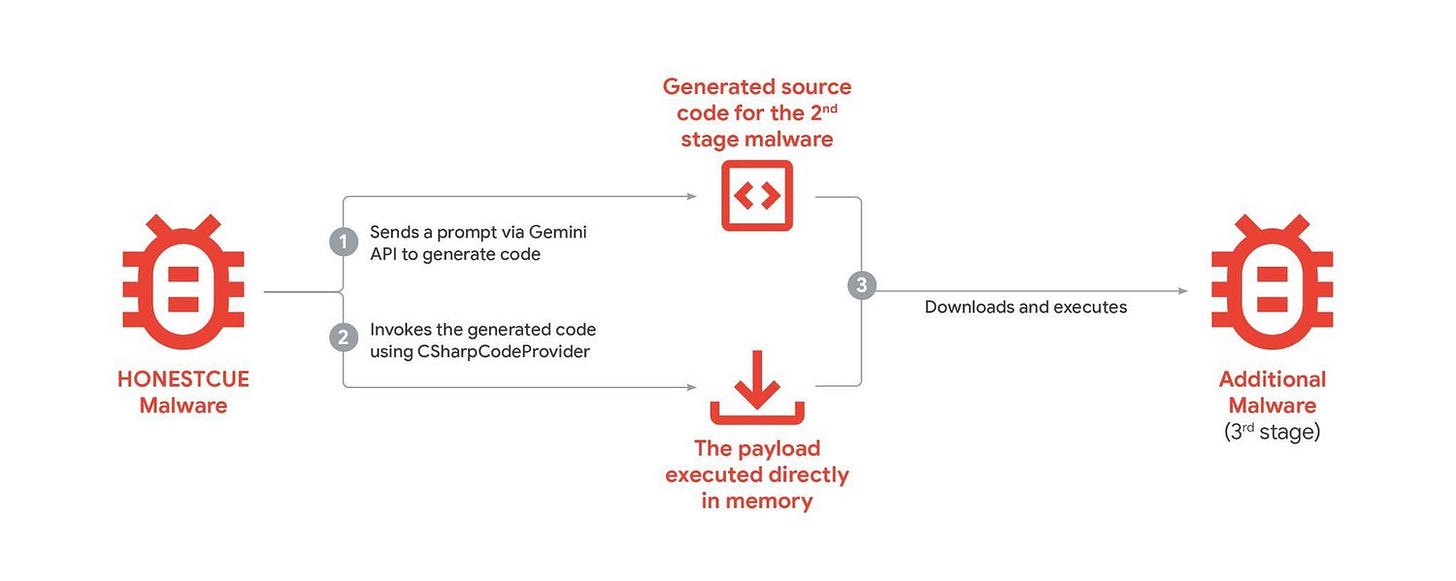

In a detailed analysis of late-2025 threat activity, Google revealed a surge in “Distillation Attacks.” State-sponsored actors and private entities are systematically probing mature models like Gemini to extract their reasoning patterns, effectively cloning the “mind” of a high-end AI into smaller, unconstrained local models.

This marks a critical pivot. The threat is no longer just “bad actors using ChatGPT.” It is bad actors exporting the capability of ChatGPT to run offline, without safety filters.

The Theft of Reasoning

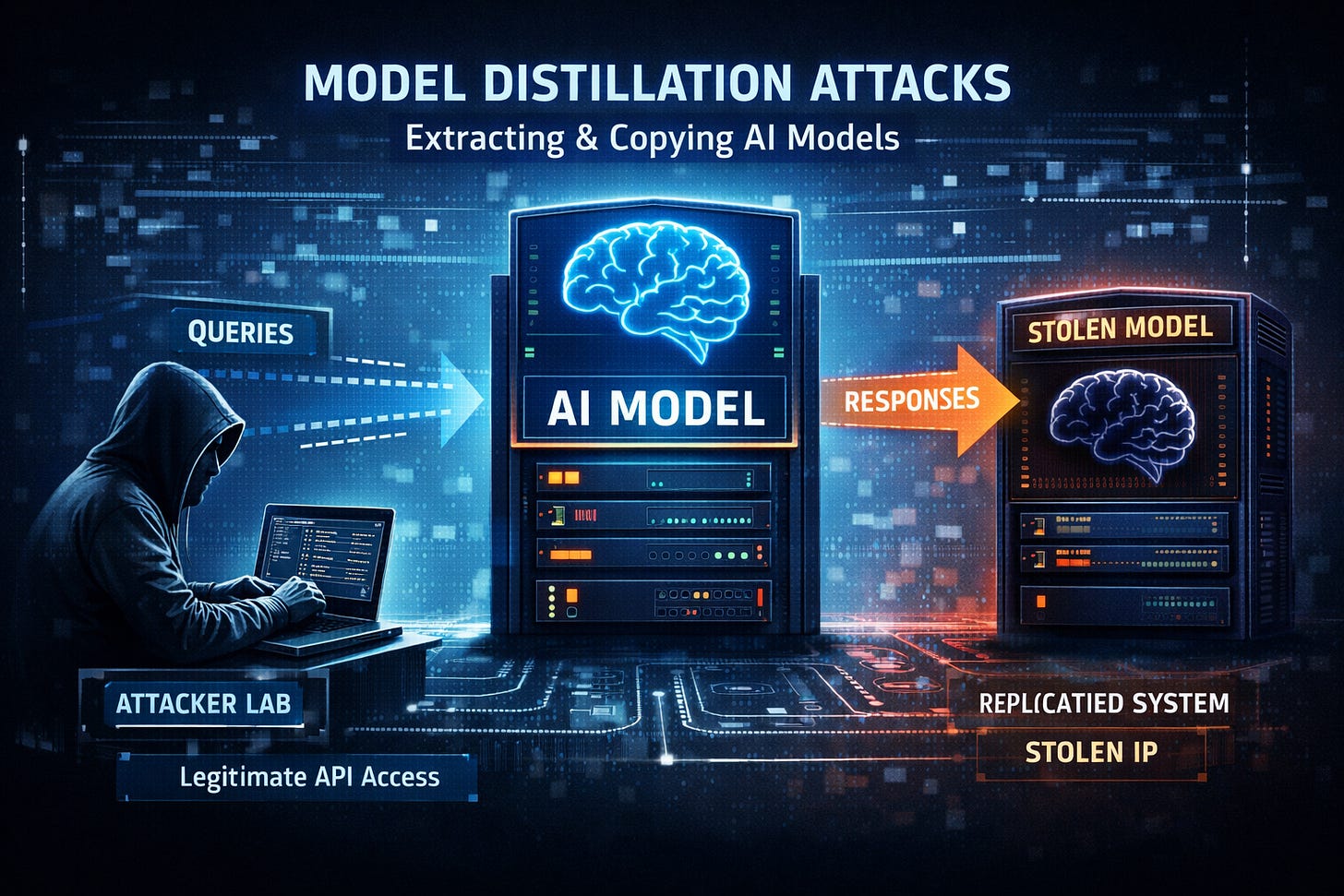

Model extraction, or distillation, is the defining trend of this report.

Adversaries know that training a frontier model costs billions. Querying an existing model to map its decision-making process, by comparison, costs pennies. By recording thousands of input-output pairs, attackers can train a smaller “student” model that mimics the capabilities of the larger “teacher.”
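To make the teacher/student loop concrete, here is a toy sketch in Python. It is not taken from the GTIG report and is nowhere near the scale of an attack on a production language model. The hypothetical `teacher` function stands in for a model behind an API whose internals the attacker cannot see, and a two-feature perceptron stands in for the smaller “student.” The student never reads the teacher’s rule; it learns only from the recorded input-output pairs.

```python
import random
from typing import List, Tuple

# One recorded query: ((input features), teacher's answer)
Pair = Tuple[Tuple[float, float], int]


def teacher(x1: float, x2: float) -> int:
    """Hypothetical black-box 'teacher'. The attacker sees only its output."""
    # Hidden decision rule, unknown to the attacker.
    return 1 if 2.0 * x1 - 1.0 * x2 + 0.3 > 0 else 0


def collect_pairs(n: int, seed: int = 0) -> List[Pair]:
    """Query the teacher on random inputs and record each input-output pair."""
    rng = random.Random(seed)
    pairs: List[Pair] = []
    for _ in range(n):
        x = (rng.uniform(-1, 1), rng.uniform(-1, 1))
        pairs.append((x, teacher(*x)))
    return pairs


def train_student(pairs: List[Pair], epochs: int = 50, lr: float = 0.1) -> Tuple[float, float, float]:
    """Fit a perceptron 'student' that imitates the teacher's recorded answers."""
    w1 = w2 = b = 0.0
    for _ in range(epochs):
        mistakes = 0
        for (x1, x2), y in pairs:
            pred = 1 if w1 * x1 + w2 * x2 + b > 0 else 0
            err = y - pred  # -1, 0, or +1
            if err:
                mistakes += 1
                w1 += lr * err * x1
                w2 += lr * err * x2
                b += lr * err
        if mistakes == 0:  # every recorded pair is reproduced; stop early
            break
    return w1, w2, b


def agreement(student: Tuple[float, float, float], n: int = 2000, seed: int = 1) -> float:
    """Fraction of fresh, unseen inputs on which the student matches the teacher."""
    w1, w2, b = student
    rng = random.Random(seed)
    hits = 0
    for _ in range(n):
        x1, x2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        pred = 1 if w1 * x1 + w2 * x2 + b > 0 else 0
        if pred == teacher(x1, x2):
            hits += 1
    return hits / n


if __name__ == "__main__":
    recorded = collect_pairs(1000)
    student = train_student(recorded)
    print(f"student agrees with teacher on {agreement(student):.1%} of new inputs")
```

The point of the design is that `train_student` only ever consumes query results, so the attacker’s cost is bounded by the number of queries rather than by the teacher’s training budget. Distilling a real language model swaps the labels for generated text or reasoning traces and the perceptron for a neural network, but the loop is the same: query, record, fit.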

This is intellectual property theft weaponized for cyber operations.

It allows adversaries to bypass the API restrictions and safety guardrails that companies like Google and OpenAI spend millions building. Once the reasoning capability is distilled, it can be repurposed for malware development or vulnerability research in an environment where no one is watching.

From Chatbots to “Agentic” Threats

The report also highlights a move toward agentic AI: systems designed to act, not just talk.

GTIG observed PRC-based groups such as APT31 and UNC795 moving beyond simple queries. They are building workflows in which AI personas act as “expert” cybersecurity consultants.

UNC795 was seen attempting to build an AI-integrated code auditing tool, effectively automating the search for zero-day vulnerabilities.

APT31 used expert personas to generate targeted testing plans for SQL injection and Remote Code Execution (RCE).

This is the operational reality of 2026. The adversary is not just asking the AI to write a phishing email; they are asking it to audit the target’s architecture and suggest the most efficient kill chain.

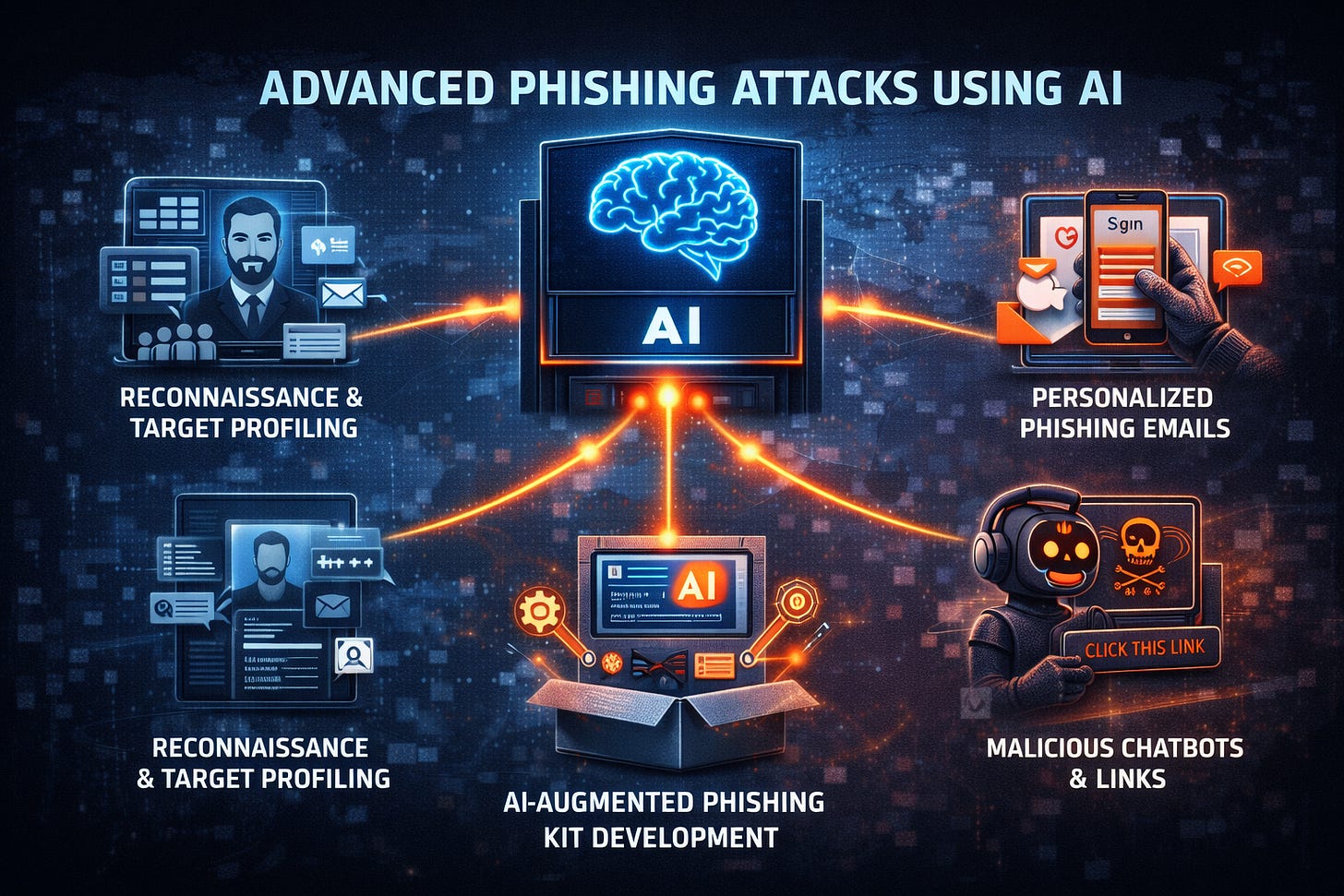

The “ClickFix” Trap: Weaponizing Trust

Perhaps the most insidious tactic detailed is the “ClickFix” social engineering technique.

Attackers are generating helpful-looking technical troubleshooting conversations and hosting them on legitimate shared-chat links from AI services (e.g., gemini.google.com/share/...).

The victim receives a link to a trusted Google domain. They see a conversation where an AI solves a complex error. The “solution” involves pasting a command into their terminal. Because the context is a helpful AI interaction on a trusted platform, the victim complies.

It is a supply chain attack on user trust. The malware payload hides in plain sight, validated by the apparent authority of the AI itself.

The Integration Phase

We have moved past the “experimentation” phase of adversarial AI.

Google’s report confirms that for threat groups primarily linked to the DPRK, Iran, and the PRC, AI is now an integrated component of the toolkit, used for translation, code debugging, reconnaissance, and social engineering.

The underground economy is following suit. Services like “Xanthorox” claim to offer custom offensive models, though many of these services are simply wrappers around jailbroken commercial APIs. The demand is there, and the market is responding.

The New Asymmetry

Defenders have long relied on the fact that high-end AI is centralized, monitored, and expensive. If adversaries can successfully distill that intelligence into portable, unmonitored models, that advantage evaporates.

We are entering an era where high-fidelity machine reasoning is a commodity available to the highest bidder, or the most patient thief.

Source: https://cloud.google.com/blog/topics/threat-intelligence/distillation-experimentation-integration-ai-adversarial-use