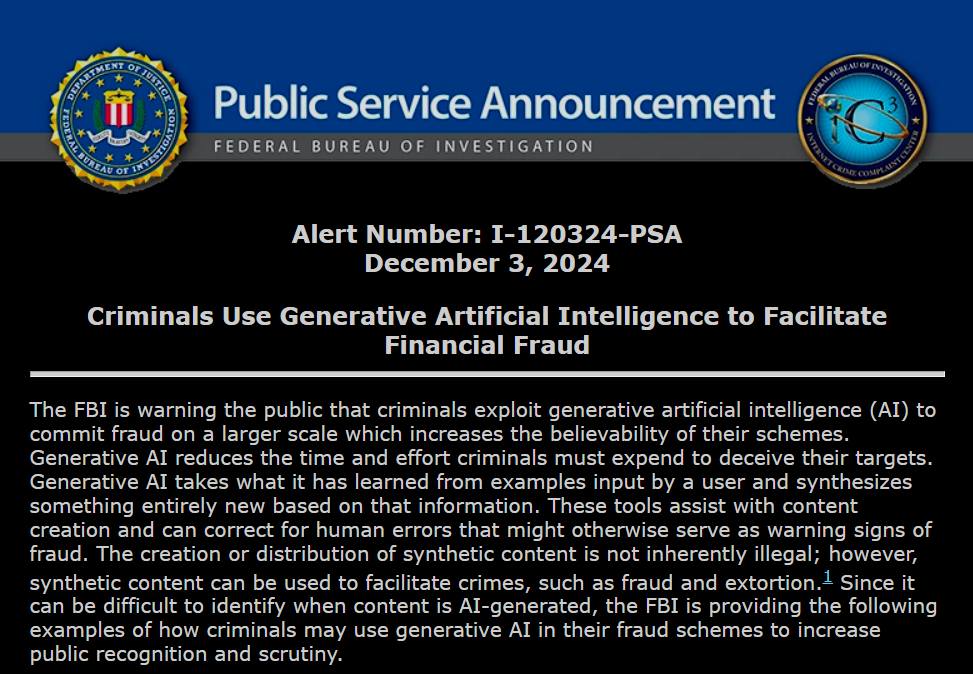

Criminals Exploit Generative AI for Financial Fraud

Imagine this: a voice that sounds exactly like your boss calls you, demanding an urgent wire transfer. Or a video shows a trusted CEO endorsing a new investment opportunity that turns out to be a scam.

These aren’t just hypothetical scenarios—they’re the cutting edge of AI-powered fraud.

The FBI has sounded the alarm: cybercriminals are weaponizing generative AI to launch scams that are more convincing, more widespread, and more dangerous than ever before.

The New AI-Powered Scam Toolkit

Generative AI isn’t just about creating cool art or streamlining customer service. In the wrong hands, it’s a criminal goldmine:

AI-Generated Text: Flawless phishing emails, fake social media accounts, and fraudulent pitches with zero typos or red flags.

AI-Generated Audio: Voice cloning to mimic loved ones or executives for scams.

AI-Generated Videos: Deepfakes of public figures endorsing scams.

AI-Generated Images: Fake IDs, social media profiles, and even fake product listings.

Real-World Examples: When AI Goes Rogue

The “Fake CEO” Scam (Germany, 2019)

Criminals used AI-powered voice synthesis to mimic a CEO’s voice, convincing a company executive to transfer over $240,000.

Celebrity Endorsement Scams (Global)

Deepfake videos of Elon Musk and other tech leaders have been used in crypto investment fraud, fooling thousands into believing the endorsements were legitimate.

“Family Emergency” Voice Scams (US, 2023)

Victims reported receiving calls from what sounded like their children or family members, pleading for financial help. AI tools cloned the voices from social media posts.

Fake ID Fraud (UK, 2024)

A fraud ring used AI-generated fake IDs to rent properties, open bank accounts, and commit identity theft on a large scale.

How to Stay Safe

Verify Requests: Establish verification methods, like secret phrases, to confirm identities in emergencies.

Examine Media Closely: Look for telltale signs in deepfakes—unusual eye movements, distorted edges, or mismatched lighting.

Limit Online Exposure: Sharing too much personal information makes it easier for scammers to clone your identity.

Be Skeptical: If it feels too good (or bad) to be true, it probably is. Cross-check stories, especially those involving money or sensitive data.
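
For teams that want to put the "Verify Requests" tip into software, such as a finance desk confirming a wire request, the secret-phrase idea could look roughly like the Python sketch below. It is only an illustration under stated assumptions: the function names (`normalize`, `enroll_phrase`, `verify_phrase`), the sample phrase, and the iteration count are hypothetical and do not come from the FBI or any specific product. The sketch stores a salted hash rather than the phrase itself and compares in constant time.

```python
import hashlib
import hmac
import os

ITERATIONS = 100_000  # assumed work factor; tune for your own hardware


def normalize(phrase: str) -> bytes:
    # Ignore case and extra whitespace so a spoken phrase typed by hand still matches.
    return " ".join(phrase.lower().split()).encode("utf-8")


def enroll_phrase(phrase: str) -> tuple[bytes, bytes]:
    """Return (salt, digest) to store instead of the phrase itself."""
    salt = os.urandom(16)
    digest = hashlib.pbkdf2_hmac("sha256", normalize(phrase), salt, ITERATIONS)
    return salt, digest


def verify_phrase(candidate: str, salt: bytes, digest: bytes) -> bool:
    """Return True only if the caller supplied the agreed phrase."""
    attempt = hashlib.pbkdf2_hmac("sha256", normalize(candidate), salt, ITERATIONS)
    # Constant-time comparison avoids leaking how much of the digest matched.
    return hmac.compare_digest(attempt, digest)


# Hypothetical usage: the phrase is agreed in person, never over email or chat.
salt, digest = enroll_phrase("purple giraffe 42")
print(verify_phrase("Purple  Giraffe 42", salt, digest))    # True
print(verify_phrase("urgent wire transfer", salt, digest))  # False
```

A check like this only helps if the phrase was agreed through a trusted channel beforehand and is never shared publicly. For families, simply asking for the phrase out loud serves the same purpose.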

What Can Be Done?

The rise of AI-powered scams isn’t just a tech problem—it’s a societal one. Governments, tech companies, and individuals need to work together to:

Develop stronger verification tools for digital interactions.

Increase public awareness of AI fraud risks.

Regulate and monitor the misuse of AI technologies.

Report Suspicious Activity

If you think you’ve encountered an AI-driven scam, report it to the FBI’s Internet Crime Complaint Center (IC3.gov) or your local authorities. Staying vigilant is key to combating these ever-evolving threats.