Hundreds of Vector DB Servers Running for LLM Platforms Expose Corporate, Health, and Online Data

Imagine this: your vector DB servers, which feed an LLM designed to spit out text like a futuristic wordsmith, are accidentally airing your company’s dirty laundry online.

Sounds like a plot twist, right? Well, that’s what’s happening with hundreds of vector DB servers running behind LLM platforms. These servers, crucial for AI operations, are leaking everything from corporate secrets to personal health info because of sloppy security.

What’s Going Wrong?

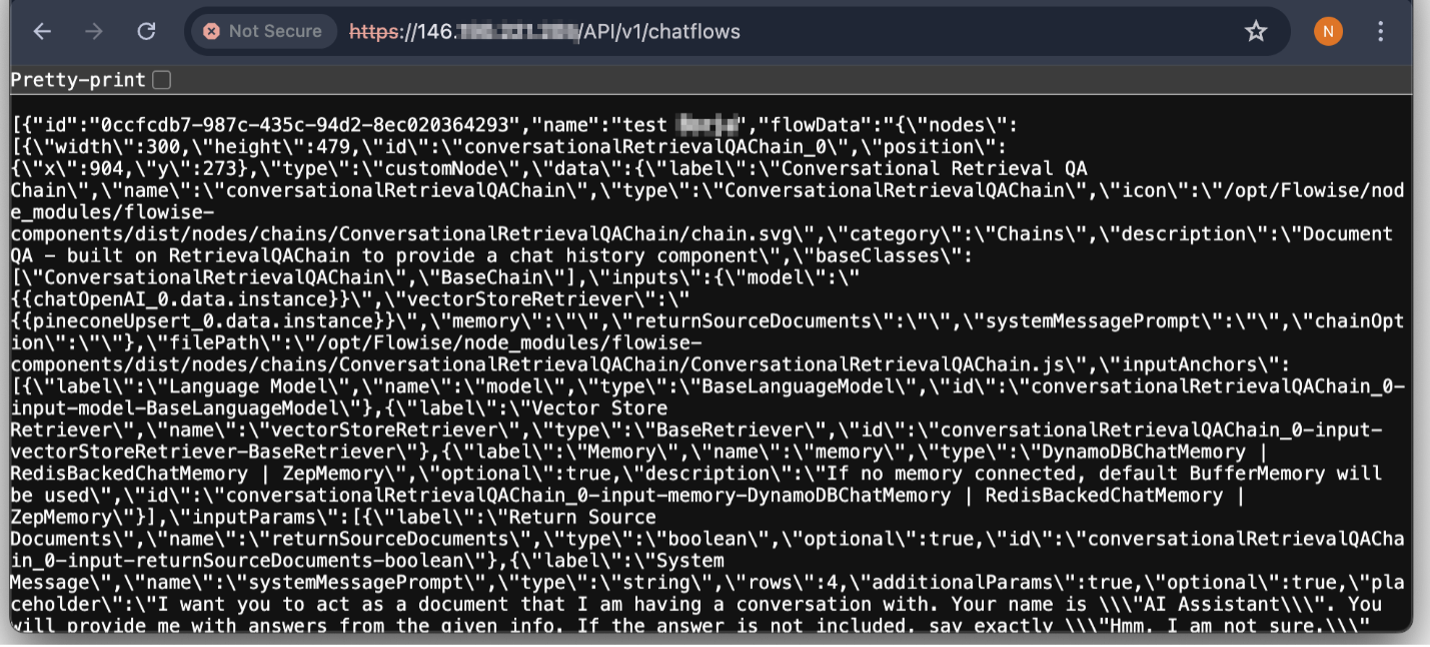

LLMs like GPT-4 are powerful tools for creating and analyzing text, but they require significant amounts of data to function. Companies typically deploy these models on servers that interact with various datasets, including customer information, internal communications, and other sensitive materials. However, misconfigurations, lack of proper access controls, and insufficient monitoring have led to these servers being exposed to the internet, making the data accessible to unauthorized users.

Technical Insights:

LLM Servers: These are cloud or on-premise systems where large language models run. They process requests and generate outputs based on the data they’ve been trained on.

Data Exposure: When these servers are not secured, they can leak information. This can happen through unsecured APIs, misconfigured firewalls, or lack of encryption.
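
One rough way to check for this kind of exposure on a server you own is to send a request with no credentials and see what comes back. The sketch below assumes the server speaks plain HTTP. The names `probe` and `classify` are illustrative and not part of any vector DB product, and the status-code mapping is a simplification.

```python
import urllib.error
import urllib.request
from typing import Optional


def probe(url: str, timeout: float = 5.0) -> Optional[int]:
    """Send one GET with no credentials; return the HTTP status, or None if unreachable."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as resp:
            return resp.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses still prove that a server answered.
        return err.code
    except (urllib.error.URLError, OSError):
        # Refused, timed out, or DNS failure: nothing answered from here.
        return None


def classify(status: Optional[int]) -> str:
    """Map the result of an unauthenticated probe to a rough verdict."""
    if status is None:
        return "unreachable"
    if status in (401, 403):
        return "auth required"
    if 200 <= status < 300:
        return "open: data served without credentials"
    return "inconclusive"
```

Run it only against servers you are responsible for, for example `classify(probe("http://vector-db.internal.example:8080/"))`, where the host and port are placeholders. An "open" verdict from outside your network is the situation described above.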

Access Controls: Ensuring only authorized users can access the server is crucial. Without proper controls, anyone with an internet connection might gain access to sensitive data.
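
As a minimal sketch of what such a control can look like, the function below rejects any request that does not carry an expected bearer token. It assumes a single shared API key and an `Authorization` header. The name `is_authorized` and the key value in the usage note are hypothetical, and a real deployment would more likely rely on the database's own authentication settings or a gateway in front of it.

```python
import hmac
from typing import Mapping


def is_authorized(headers: Mapping[str, str], expected_key: str) -> bool:
    """Allow a request only if it carries the expected bearer token."""
    supplied = headers.get("Authorization", "")
    prefix = "Bearer "
    if not expected_key or not supplied.startswith(prefix):
        # Fail closed: no configured key or no token means no access.
        return False
    token = supplied[len(prefix):]
    # compare_digest avoids leaking how many characters matched via timing.
    return hmac.compare_digest(token.encode(), expected_key.encode())
```

For example, `is_authorized({"Authorization": "Bearer s3cret"}, "s3cret")` passes, while a request with a wrong or missing header is refused. Note that an unset key also refuses everything, so a forgotten configuration value fails closed instead of leaving the server open.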

Why Should You Care?

Leaked data could spell disaster for your business, from PR nightmares to legal headaches. It’s like leaving your vault wide open so that anyone can walk in.