When AI Becomes the Vulnerability Hunter: Claude Opus 4.6 and the Acceleration of Software Risk

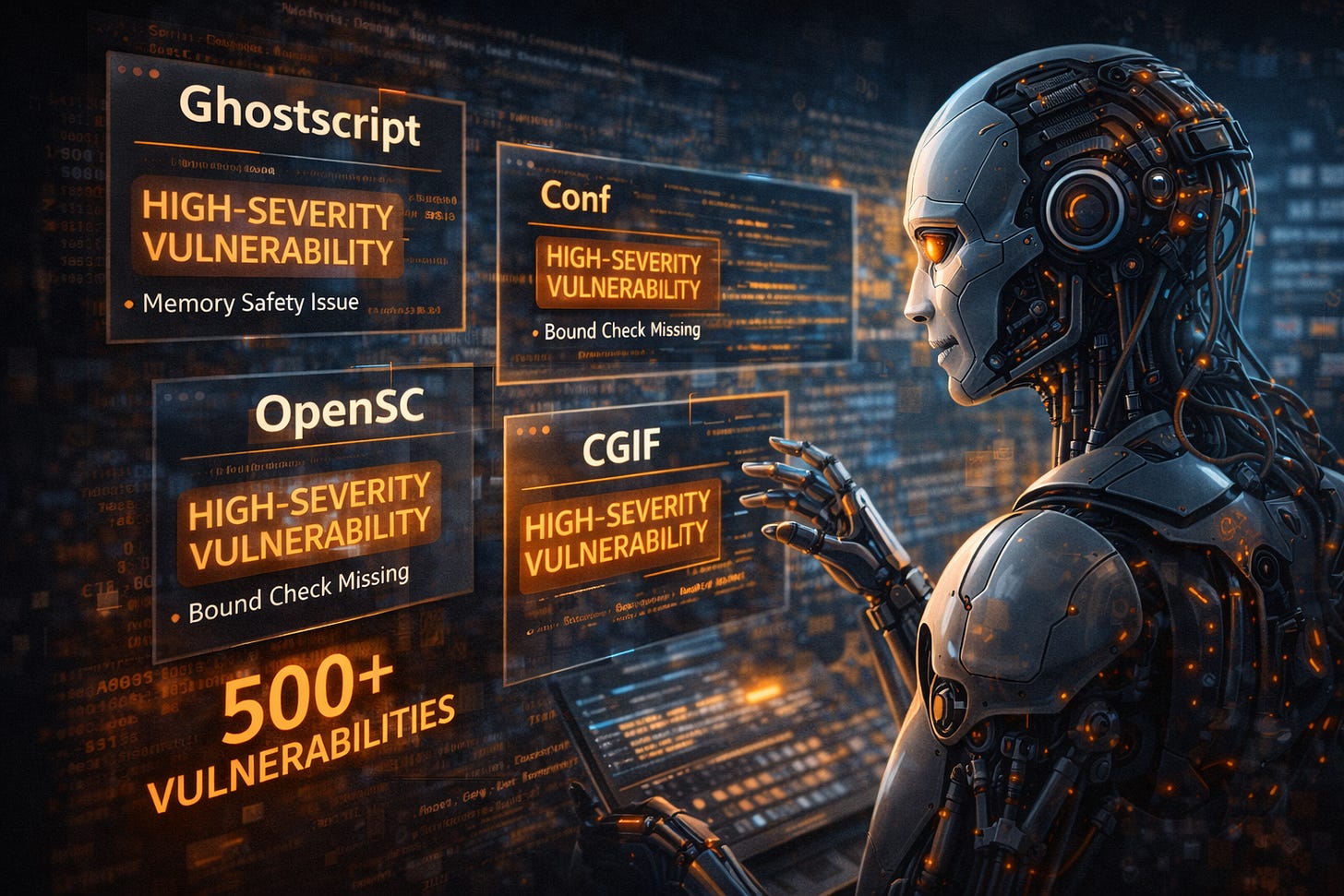

The rules of vulnerability discovery are changing faster than most security programs are prepared to absorb. In early February 2026, Claude Opus 4.6 identified more than 500 high-severity vulnerabilities across widely used open-source libraries. It did not find them through fuzzing at scale or signature matching, but through semantic reasoning about the code itself.

This was not a red-team stunt or a marketing demo. The findings were validated, reported, and patched. What matters more than the raw number is what this event signals: vulnerability discovery is no longer constrained by human throughput.

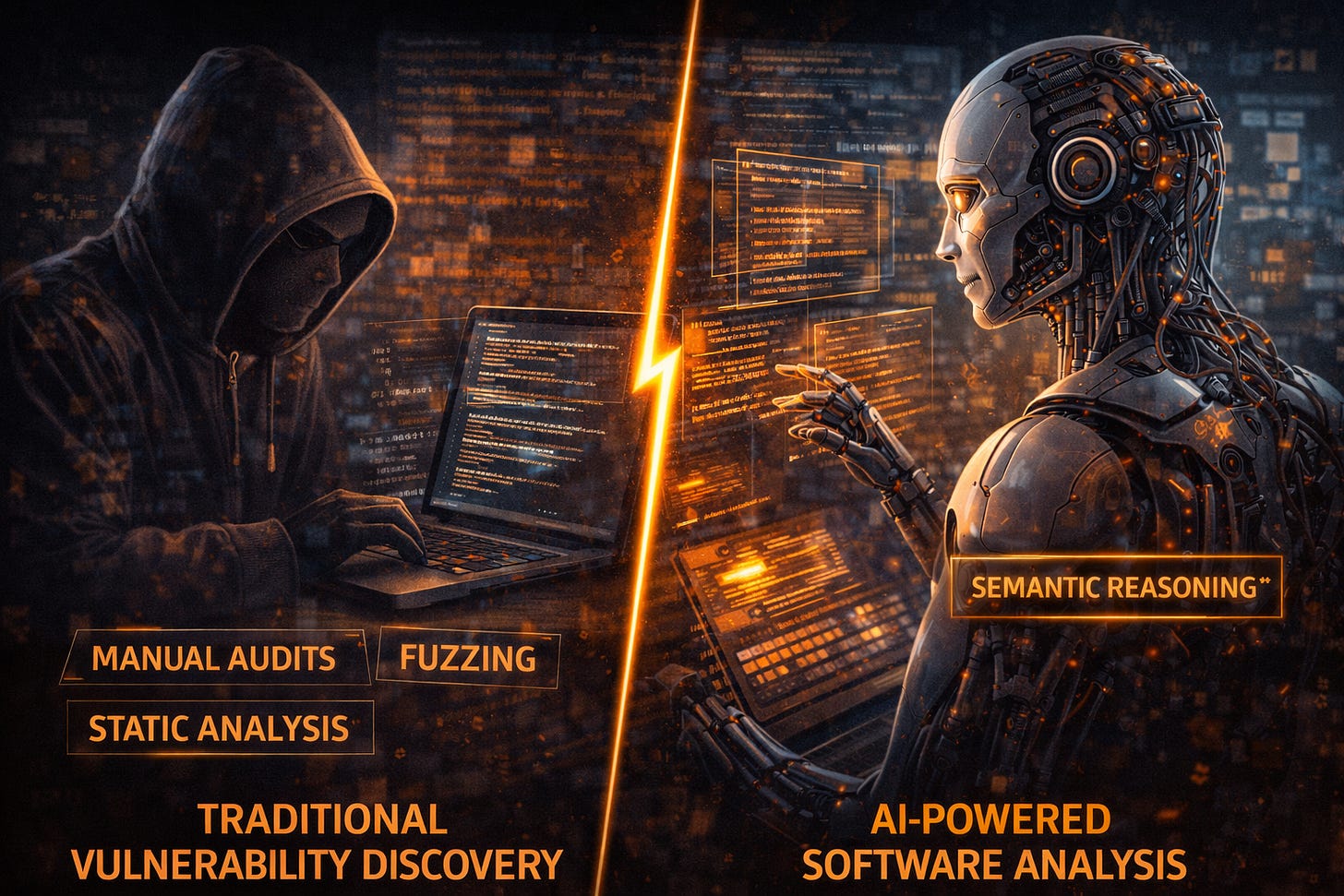

From Manual Audits to Machine-Scale Reasoning

For decades, software security has relied on a familiar mix: static analysis, fuzzers, manual audits, and the intuition of experienced researchers. These approaches work, but they do not scale cleanly across the modern dependency graph.

Claude Opus 4.6 changes that equation.

The model analyzed real production codebases and surfaced memory safety issues, bounds violations, and logic flaws in mature projects that have been reviewed for years. These were not obscure edge cases buried in experimental repositories. They were defects in core libraries embedded across countless downstream systems.

What distinguishes this from previous AI-assisted tooling is not automation alone, but reasoning. The model inferred how code should behave, compared that expectation to implementation, and flagged deviations that humans had either missed or deprioritized.

This is not pattern matching. It is specification inference at scale.

The Illusion of Open-Source Maturity

One of the most uncomfortable takeaways from this event is how many high-severity issues persisted in widely trusted open-source components.

These libraries were not neglected. They were maintained, patched, and deployed at scale. Yet hundreds of serious flaws remained undiscovered until an AI model examined them holistically.

This reinforces a long-standing reality in supply chain security: widespread usage does not imply deep assurance. Popular code accumulates fixes, not guarantees. Over time, complexity grows faster than human review capacity.

AI does not get tired. It does not context-switch. It does not assume previous reviewers were correct.

The Defender’s Advantage and the Adversary’s Opportunity

It is tempting to frame this development as a defensive breakthrough, and it is. But it is also an offensive accelerant.

Any capability that reduces the cost of discovering vulnerabilities will eventually reshape attacker economics. The same reasoning that allows defenders to surface latent flaws can be repurposed to identify exploit paths, chain weaknesses, and prioritize targets at unprecedented speed.

The question is no longer whether attackers will use AI for vulnerability discovery. That threshold has already been crossed.

The question is who operationalizes it first, and at what scale.

Why This Changes Vulnerability Management

Most vulnerability management programs are reactive by design. They wait for disclosures, score CVEs, and triage based on exploitability and asset exposure.

AI-driven discovery disrupts that model entirely.

When unknown vulnerabilities can be surfaced proactively and continuously, the distinction between “known” and “unknown” risk begins to collapse. Organizations that integrate AI-assisted code reasoning into development and review pipelines gain visibility into flaws before they are assigned CVEs or weaponized.

Those that do not will increasingly find themselves patching after the fact, reacting to disclosures generated by others, including adversaries.

The New Baseline: AI-Augmented Security Engineering

Claude Opus 4.6’s findings are not an anomaly. They are a preview.

Software security is entering a phase where machine reasoning becomes a baseline capability, not a novelty. Human expertise remains essential, but it will increasingly shift toward validation, prioritization, and architectural decision-making rather than raw discovery.

The organizations that adapt will treat AI as a force multiplier for assurance, embedding it into code review, dependency analysis, and secure design processes.

Those that do not will face a growing asymmetry: more vulnerabilities, discovered faster, by actors with fewer constraints.

The Real Shift

This moment is not about one model or one vendor. It is about a structural change in how software risk is exposed.

For years, defenders assumed that the hardest vulnerabilities to find were also the least likely to be exploited. AI is eroding that assumption. Latent flaws buried in mature codebases are no longer protected by obscurity or reviewer fatigue.

The implication is stark.

Security is no longer limited by how much code humans can read.

And once that limit disappears, everything downstream changes.

Source: https://red.anthropic.com/2026/zero-days/