When the Zoom Call Is the Malware: UNC1069 and the Industrialization of AI-Driven Trust Exploitation

We’ve moved past the point where AI merely assists phishing and entered a phase where AI is embedded in the social engineering infrastructure itself.

There’s a subtle but important shift happening in offensive tradecraft.

The recent UNC1069 campaign, linked to North Korean operators and targeting crypto and fintech ecosystems, is not interesting because it uses deepfakes.

It’s interesting because it operationalizes trust as an attack surface.

And that’s a structural shift.

The Setup: Familiar Faces, Familiar Flows

The attack chain begins where most modern compromises now start: not with vulnerability scanning, but with access to an existing human network.

A compromised Telegram account.

A legitimate Calendly invite.

A Zoom meeting link that looks right.

Nothing in that sequence triggers classical defensive heuristics.

There’s no malicious PDF.

No obvious phishing lure.

No payload delivered in the first interaction.

Instead, the adversary inserts themselves into a workflow that already exists.

That’s the key.

The Meeting Is the Payload

Here’s where the tradecraft evolves.

Victims join what appears to be a legitimate Zoom session. In some cases, the “participant” is either:

A recycled recording of a real industry figure

Or an AI-assisted deepfake rendering

The nuance here matters less than the operational consequence:

Visual presence is no longer a trust signal.

For years, we’ve trained users to distrust links.

Now they have to distrust live humans.

That’s a psychological escalation.

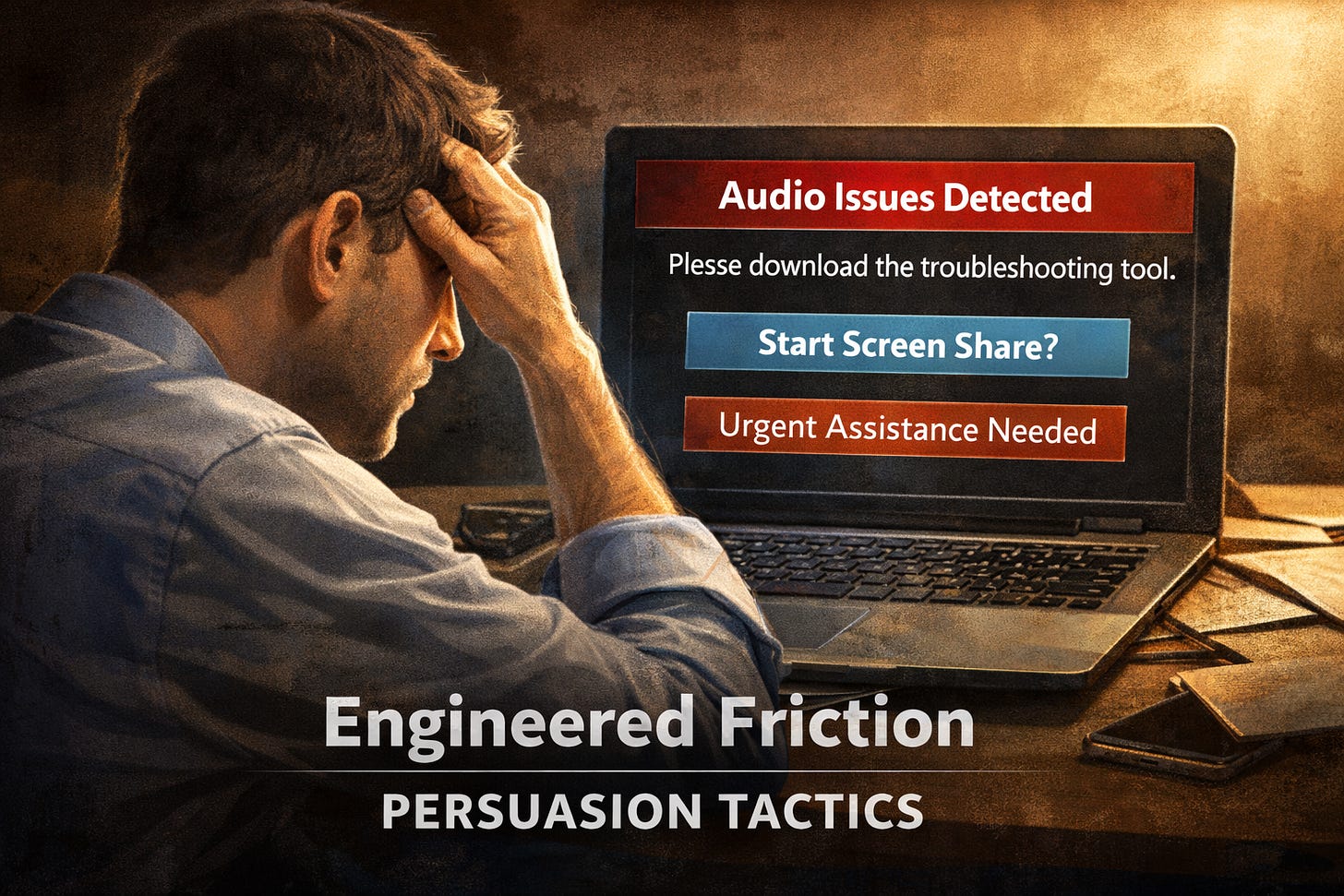

The Pivot: Engineered Friction

The infection vector isn’t brute force; it’s friction.

Audio issues.

“Can you download this troubleshooting package?”

Screen-share prompts.

Urgency layered with credibility.

This is persuasion engineering, not opportunistic phishing.

UNC1069 isn’t just trying to trick the user.

They’re guiding them through a controlled decision path.

That distinction matters.
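
The friction pattern above leaves a timing trace defenders can look for: a risky download or script execution that starts while a call is in progress. Here is a minimal sketch, assuming hypothetical `Meeting` and `EndpointEvent` records exported from calendar and endpoint telemetry. The field names, event labels, and suffix list are illustrative assumptions, not details from the campaign itself.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical record shapes; real calendar and EDR exports will differ.
@dataclass(frozen=True)
class Meeting:
    user: str
    start: datetime
    end: datetime

@dataclass(frozen=True)
class EndpointEvent:
    user: str
    timestamp: datetime
    kind: str      # assumed labels, e.g. "download" or "script_exec"
    target: str    # file name or command line

# Illustrative set of file types worth a second look during a call.
RISKY_SUFFIXES = (".scpt", ".sh", ".pkg", ".dmg", ".exe", ".ps1")

def events_during_meetings(meetings, events, grace=timedelta(minutes=5)):
    """Return (meeting, event) pairs where a risky event fell inside a call window."""
    flagged = []
    for event in events:
        risky = (event.kind == "script_exec"
                 or event.target.lower().endswith(RISKY_SUFFIXES))
        if not risky:
            continue
        for meeting in meetings:
            in_window = meeting.start <= event.timestamp <= meeting.end + grace
            if meeting.user == event.user and in_window:
                flagged.append((meeting, event))
                break  # one matching meeting is enough to flag the event
    return flagged
```

A hit here is not proof of compromise. It is a prompt to ask why a “troubleshooting package” was opened in the middle of a meeting.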

Why This Campaign Signals Something Bigger

This isn’t about crypto. It isn’t even about North Korea specifically.

It’s about the industrialization of persona manipulation.

Three strategic shifts are visible here:

1. AI as Interface Manipulation

We’ve focused heavily on AI generating content.

But the real leverage is AI generating presence.

Presence changes risk models. It lowers skepticism. It accelerates compliance.

That’s not incremental improvement; it’s asymmetry.

2. Identity Verification Is Officially Broken

Video is compromised.

Voice is compromised.

Message history can be compromised.

Which means the old mental model of “I saw them, so it’s real” is obsolete.

Most security stacks were not designed for adversaries who can manufacture social context on demand.

3. UX Is Now an Attack Surface

Calendar invitations.

Video conference platforms.

Collaboration tools.

The modern enterprise runs on workflow UX.

And that UX layer is now directly exploitable through AI-enhanced impersonation.

This blurs the line between social engineering and platform abuse.

The Strategic Objective

North Korean cyber operations have historically prioritized revenue generation, especially in crypto ecosystems.

But what stands out here isn’t the financial targeting.

It’s the maturity of execution.

This campaign shows:

Coordinated persona compromise

Multi-stage malware deployment

Psychological sequencing

AI-assisted identity rendering

That’s operational discipline.

Not experimentation.

What Defenders Are Missing

Most organizations are still defending against:

Malicious attachments

Suspicious domains

Known malware signatures

But UNC1069’s real weapon isn’t a file.

It’s a believable interaction.

If your detection model begins after the file download, you’re already late.

The real detection layer needs to focus on:

Anomalous meeting context

Behavioral deviations in executive workflows

Cross-platform identity inconsistencies

Unexpected tool invocation inside live collaboration sessions

That’s uncomfortable territory because it pushes security into human behavior monitoring.
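
The signals listed above can be combined into a simple pre-meeting risk score. This is a sketch under stated assumptions: the `MeetingContext` fields, the weights, and the allowlist of conferencing hosts are hypothetical placeholders chosen for illustration, and any real deployment would need to tune them against its own data.

```python
from dataclasses import dataclass
from urllib.parse import urlparse

# Assumed allowlist; replace with the conferencing domains your organization uses.
TRUSTED_MEETING_HOSTS = {"zoom.us", "meet.google.com", "teams.microsoft.com"}

@dataclass(frozen=True)
class MeetingContext:
    meeting_url: str
    invite_channel: str                 # where the invite arrived, e.g. "telegram"
    usual_channels: frozenset           # channels this contact normally uses
    first_meeting_with_contact: bool
    identity_confirmed_out_of_band: bool

def host_is_trusted(url: str) -> bool:
    """True if the URL's host is an allowlisted domain or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == d or host.endswith("." + d) for d in TRUSTED_MEETING_HOSTS)

def risk_score(ctx: MeetingContext) -> int:
    """Sum illustrative weights; higher means more reason to verify first."""
    score = 0
    if not host_is_trusted(ctx.meeting_url):
        score += 3   # look-alike or unknown meeting domain
    if ctx.invite_channel not in ctx.usual_channels:
        score += 2   # cross-platform identity inconsistency
    if ctx.first_meeting_with_contact:
        score += 1   # no prior meeting history with this contact
    if not ctx.identity_confirmed_out_of_band:
        score += 2   # nobody verified the persona through a second channel
    return score
```

A score above a chosen threshold could trigger an out-of-band confirmation before the call instead of blocking it outright, which keeps the check inside the workflow rather than in front of it.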

The Bigger Pattern

We’re watching the collapse of passive trust signals.

Email headers failed.

Caller ID failed.

Now video presence is failing.

Each time a signal collapses, attackers gain temporary asymmetry until defenders adapt.

The difference now?

AI accelerates that collapse.

Final Assessment

UNC1069’s campaign isn’t just another crypto theft operation.

It’s a preview of what happens when generative AI becomes a scalable impersonation engine.

The meeting is the lure.

The persona is the exploit.

The workflow is the delivery channel.

And the attack doesn’t begin with malware.

It begins with confidence.

That’s the shift.